Some might say that social media is the root of all evil, the reason for low self-esteem and a wide range of mental health problems among users. The reality, however, is much more ambiguous.

There are two sides to every story. Social media can be a source of many problems, yet there are plenty of good things that come with it too. Research provides solid evidence:

- 81% of teenagers feel that social media has a positive impact on their lives.

- A recent report from the Royal Society for Public Health lists several positive aspects of social media use. According to the research, many see social media as a space for self-expression, where individuals find moral support, form communities, and build lasting relationships.

- Research from Harvard University indicates that social media itself doesn’t have a negative impact on mental health. Routine, non-addictive social media use doesn’t affect well-being negatively. Quite the contrary, it may help overcome distance and time barriers and compensate for a lack of face-to-face interactions.

But, of course, possible negative effects exist and should not be underestimated. In this article, we’re going to cover the following topics:

- What are the main negative effects of social media?

- Which groups of users are particularly vulnerable?

- What actions do social media platforms take to protect their communities?

- What are the responsibilities of social media managers?

Ready to learn how to take care of well-being on social media?

Let’s dive in.

The biggest threats of social media

This is where we move on to some of the less optimistic statistics. Unsurprisingly, many of them concern teenagers. This group is particularly vulnerable, and not without reason.

Young people in the process of developing adult personalities are still building their identity and seek validation to prove self-worth. As we’ve mentioned before, social media may seem like an attractive place to express themselves, yet there are plenty of threats that come with that.

These research results clearly illustrate the threats:

- A study from the University of Michigan has shown that the more often people use Facebook, the more unhappy they feel. Their test group’s overall life satisfaction significantly declined over the course of just two weeks. Other studies suggest that extensive social media use can lead to anxiety disorders.

- Research published by the American Psychological Association suggests that comparing yourself to others on social media can increase symptoms associated with depression. This effect is particularly strong in people prone to rumination, that is, a tendency to overthink.

- Five hours a day is a benchmark worth noting. Teens who spend more than that on social media are twice as likely to show depressive symptoms. This effect also seems to be linked to gender, as almost 60% more girls experience the issue.

- When you look at statistics concerning harassment on social media, being a girl on the internet seems quite terrifying. 57% of women claim they’ve experienced some form of harassment on Facebook, while 1 in 10 had to deal with abuse on Instagram. Working to Halt Online Abuse has reported that women make up 70% of all online abuse victims.

How are social media platforms addressing these issues?

Of course, social media giants are not blind to these phenomena. Here are some of the actions they’ve taken to protect mental health within their communities:

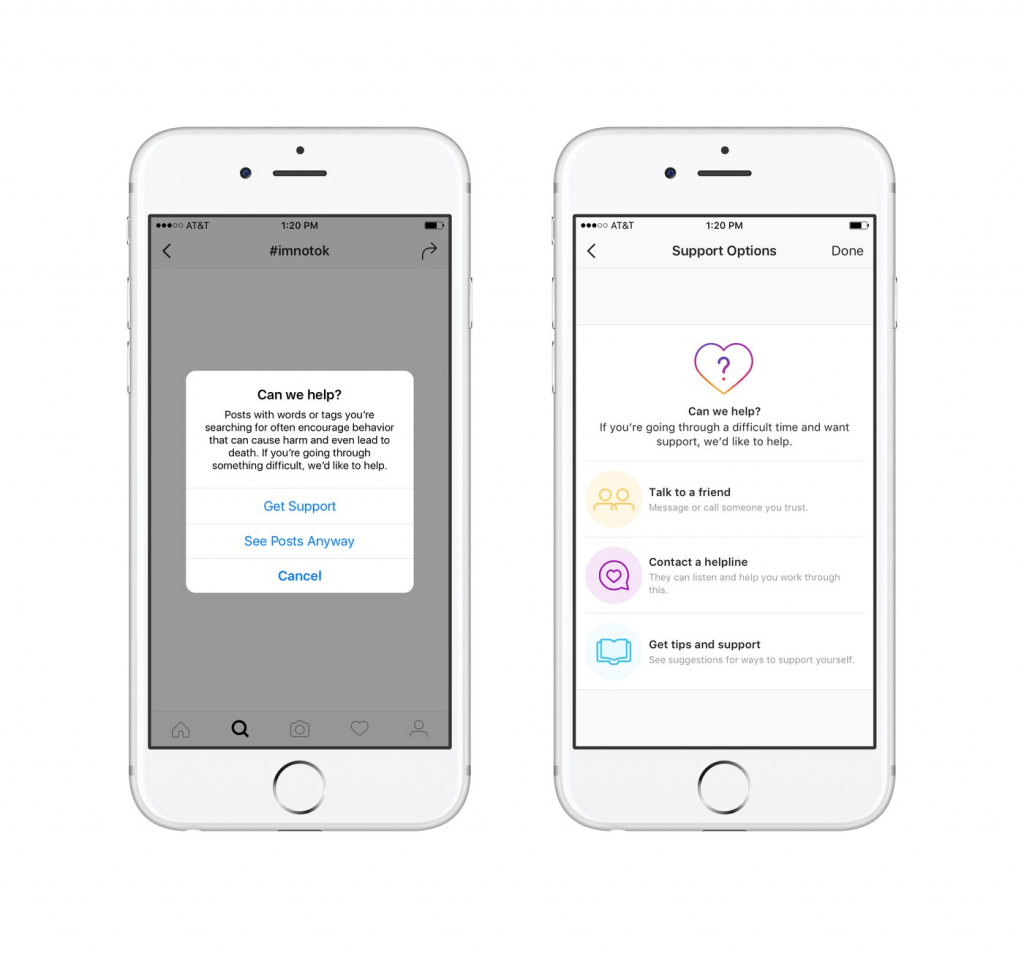

Can we help?

Let’s start with Instagram, as they’re doing quite a lot in this area. The platform implemented a suicide prevention tool back in 2016 (modelled on Facebook’s solution from 2015).

There are two different ways this feature works. First of all, it detects increased interest in hashtags associated with sadness, depression, self-harm, and related topics. A user might get a notification asking whether they need help. In this situation, Instagram encourages such users to contact a helpline, read their mental health tips, or simply talk to a trusted friend.

Speaking of friends, Instagram also gives its userbase a tool to help people they know – even anonymously. When you see a post that seems alarming, you can report it. The person who published it won’t know who flagged their content, yet they will still receive a notification. Instagram will let them know that someone has seen their post and thought they might be having a hard time.

This social platform is doing quite a lot to make users feel that they’re a part of a supportive community. Their efforts are supported by a number of mental health authorities, such as the National Suicide Prevention Lifeline. There are also dedicated solutions for every country, connecting users with region-specific authorities.

It’s not about the numbers

Let’s fast forward a couple of years. In 2019, Instagram rolled out quite a surprising test. In some countries, they started removing like counts from public profiles. Adam Mosseri, the head of Instagram, said that it was done to improve the emotional well-being and mental health of the platform’s users.

Although promising, this bold change is somewhat controversial within the influencer marketing industry. Marketers worry that it might become harder to track the effectiveness of their campaigns, as they would have to rely on the influencer’s self-reported data.

Mosseri’s response to this concern was quite firm:

“We will make decisions that hurt the business if they help people’s well-being and health.”

An interesting shift, isn’t it? The global rollout is still in progress, as the update is one of the bigger changes to the platform in years. If Instagram decides to stick with this solution, we may see a shift to more authentic, less self-censored content on the platform. We’re curious to see how things will develop.

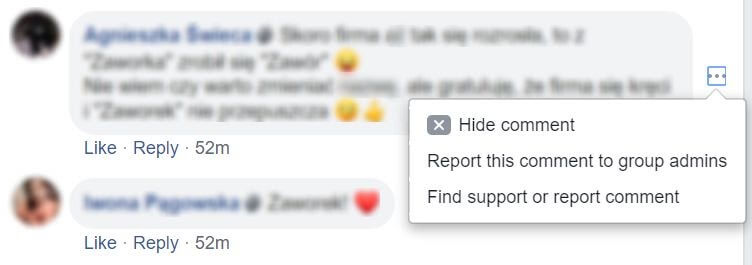

Hate speech is not welcome anywhere

What about offensive comments? Both Facebook and Instagram allow you to block certain words from comments on your Page. It’s a simple yet effective filter to create a safe space and make moderation much easier. Users can also hide or report offensive content, which is yet another way of making Facebook a safe online space.

Alright, we need to talk about Twitter now. It’s already gained notoriety for being fertile ground for online trolls. The platform lagged behind for years, but they’ve learned their lesson and are doing quite a lot to catch up now. Twitter’s Advanced Quality filter detects offensive bits of content automatically. They’ve also introduced an extended hateful conduct policy to address violent threats and other kinds of harassment more effectively.

The responsibilities of a social media manager

We all know that social media managers have a lot on their plates. Even though it’s a huge responsibility, moderation is just the tip of the iceberg. Nobody has the time to keep track of all comments 24/7. Luckily, there are tools designed to help with that.

NapoleonCat’s Social Inbox

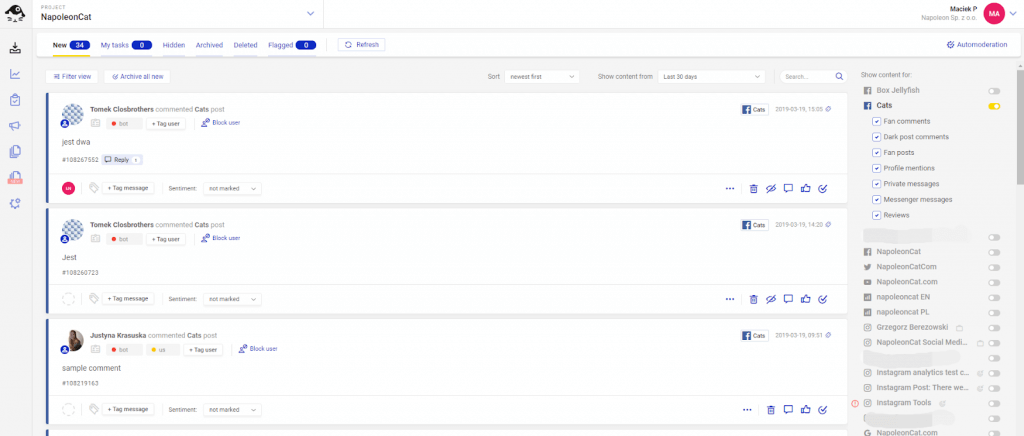

When you manage a large number of social media profiles, it can be incredibly hard to keep track of all the incoming messages and comments. The easiest way to do it is through a multi-platform social inbox. The one that comes with NapoleonCat gives you access to fan activity from Instagram, Facebook, Twitter, LinkedIn, YouTube and Google My Business profiles. Every incoming comment or message can be accessed directly from NapoleonCat’s desktop or mobile app, where it can be assigned to and handled by team members.

Using a solution like the Social Inbox gives teams all the tools they need to streamline the moderation process. It also helps in keeping tabs on multiple social profiles and making sure that no message goes unanswered – and no hateful comment stays on your profile unattended.

Automatic moderation rules

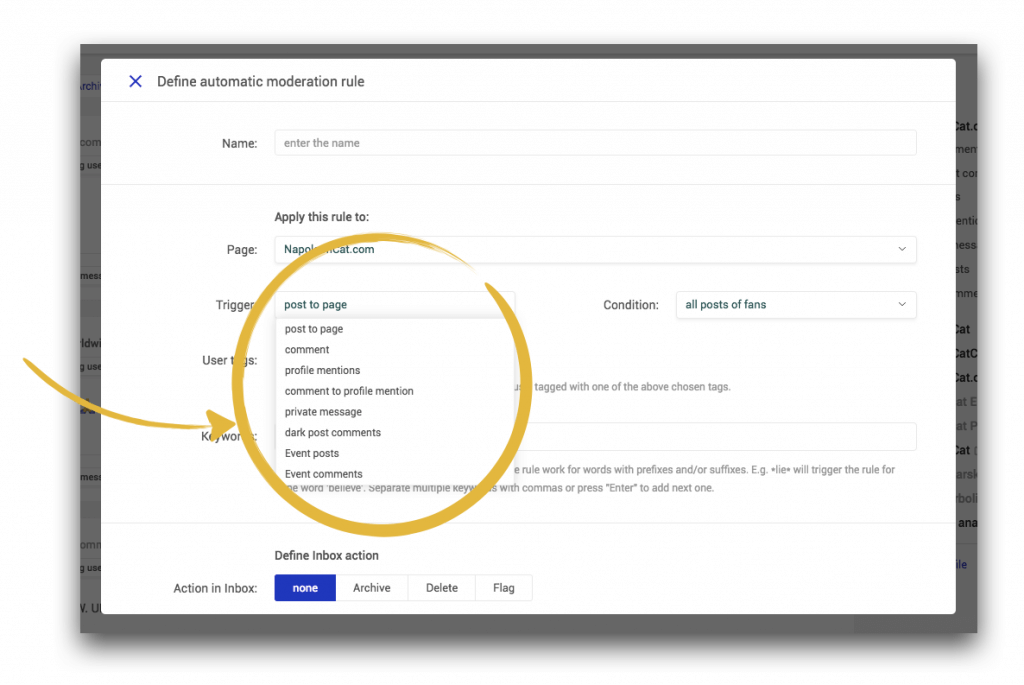

Notifications are helpful, yet they still need human action. Automatic moderation rules do the legwork for you. You can use them to:

- set up automatic replies for common, generic questions

- automatically hide or delete inappropriate content.

If you notice particularly harmful language showing up in your comment sections on Facebook or Instagram, you can easily set up automatic actions to deal with it. Any phrase you define can be a trigger that sets off an automatic reaction – hiding, deleting, or answering the comment in question.
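To make the idea of a trigger phrase and an automatic reaction more concrete, here is a minimal Python sketch of how such a rule could work in principle. It is only an illustration: the `ModerationRule` class, the `apply_rules` function, and the action names are hypothetical and are not NapoleonCat’s, Facebook’s, or Instagram’s actual API.

```python
import re
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class ModerationRule:
    """Hypothetical rule: a trigger phrase paired with an automatic action."""
    trigger: str                      # phrase that sets the rule off
    action: str                       # "hide", "delete", or "reply"
    reply_text: Optional[str] = None  # only used when action == "reply"

    def matches(self, comment: str) -> bool:
        # Case-insensitive match on the whole phrase, so the trigger "idiot"
        # does not fire on a longer word such as "idiotic".
        pattern = r"(?<!\w)" + re.escape(self.trigger) + r"(?!\w)"
        return re.search(pattern, comment, flags=re.IGNORECASE) is not None


def apply_rules(comment: str, rules: List[ModerationRule]) -> Tuple[str, Optional[str]]:
    """Return the action (and optional reply) of the first rule that matches."""
    for rule in rules:
        if rule.matches(comment):
            return rule.action, rule.reply_text
    # No trigger found: leave the comment for a human moderator to review.
    return "keep", None


if __name__ == "__main__":
    example_rules = [
        ModerationRule(trigger="idiot", action="hide"),
        ModerationRule(trigger="opening hours", action="reply",
                       reply_text="We are open 9 to 5, Monday to Friday."),
    ]
    for text in ["You are an IDIOT", "What are your opening hours?", "Great post!"]:
        print(text, "->", apply_rules(text, example_rules))
```

In this sketch the first matching rule wins, so the order of the list decides what happens when a comment contains more than one trigger. A real moderation tool may resolve such conflicts differently.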

Find out exactly how to get started in this guide to setting up automatic moderation rules.

Wrap up

Over the years, many threats have emerged on social media. Certain groups of users are particularly vulnerable, and the teams behind the largest social platforms acknowledge the facts and are working towards making social networks a safer space.

What’s the single most important takeaway from this article? Social media in itself isn’t bad, but it’s not all good either. It’s what you make it. We hope that these tools and knowledge will help you protect your social communities and contribute to the ongoing fight against online hate.